As part of my “Get Ready for Senior Tech Interviews” prep, I was working through HackerRank questions, and parsing the diagonals of a matrix came up.

Obviously, the “I am at work, not in an interview” way is to use NumPy's `diag`. However, this post is about understanding the iterations instead.
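For comparison, here is roughly what the library route looks like. This is a minimal sketch that assumes NumPy is installed. Flipping the columns with `np.fliplr` moves the anti-diagonal onto the main diagonal, which is one common way to get it but not the only one.

```python
import numpy as np

matrix = np.array([[10, 20, 30], [40, 50, 60], [70, 80, 90]])

# np.diag on a 2-D array returns its main diagonal as a 1-D array
main_diagonal = np.diag(matrix).tolist()

# Mirroring the columns left-to-right moves the anti-diagonal onto the main diagonal
anti_diagonal = np.diag(np.fliplr(matrix)).tolist()

print(main_diagonal)  # [10, 50, 90]
print(anti_diagonal)  # [30, 50, 70]
```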

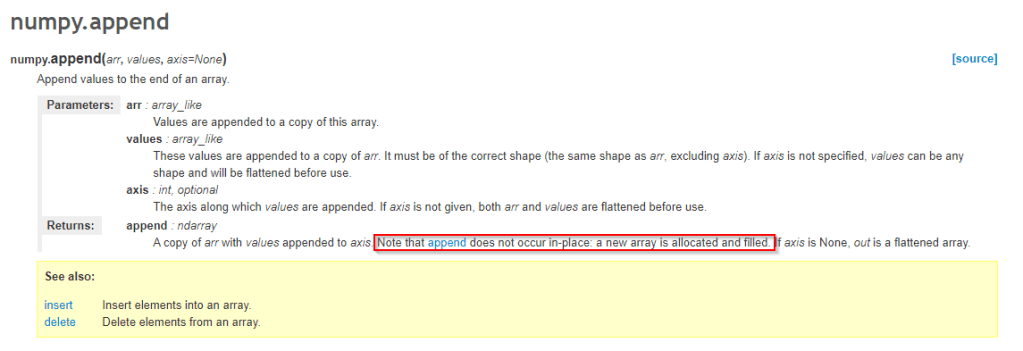

Finding Matrix Diagonals

An Illustrated Guide to the Python Techniques

1. The Main Diagonal (Top-Left to Bottom-Right)

The Core Rule

For the main diagonal, the rule is the simplest. An element is on the main diagonal if its row index is equal to its column index.

row_index == column_index
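One way to see this rule in terms of iterations is to visit every cell and keep only those whose indices match. The sketch is deliberately naive. It checks all n * n cells, whereas reading `matrix[i][i]` directly needs only n lookups. It reuses the 3 x 3 example matrix from this guide.

```python
matrix = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
n = len(matrix)  # assumes a square n x n matrix

main_diagonal = []
for row_index in range(n):
    for column_index in range(n):
        # Keep a cell only when it satisfies the main-diagonal rule
        if row_index == column_index:
            main_diagonal.append(matrix[row_index][column_index])

print(main_diagonal)  # [10, 50, 90]
```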

The Python Code

```python
matrix = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
n = len(matrix)  # assumes a square n x n matrix

# For the main diagonal, enumerate gives us the row index 'i' alongside each row
main_diagonal = [row[i] for i, row in enumerate(matrix)]

# Or, more simply, with a range over the indices
main_diagonal = [matrix[i][i] for i in range(n)]

print(main_diagonal)  # [10, 50, 90]
```
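To follow the iteration one step at a time, a small trace can print the index, the coordinates it reads, and the running result on each pass. This is an illustrative sketch on the same 3 x 3 example, and the print format is just one choice.

```python
matrix = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
n = len(matrix)

result = []
for i in range(n):
    # The rule is row == col, so step i reads matrix[i][i]
    value = matrix[i][i]
    result.append(value)
    print(f"Step {i + 1}: (i = {i}) matrix[{i}][{i}] = {value}, result so far: {result}")

# Step 1: (i = 0) matrix[0][0] = 10, result so far: [10]
# Step 2: (i = 1) matrix[1][1] = 50, result so far: [10, 50]
# Step 3: (i = 2) matrix[2][2] = 90, result so far: [10, 50, 90]
```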


2. The Anti-Diagonal (Top-Right to Bottom-Left)

The Core Rule

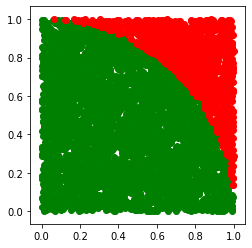

For the anti-diagonal, the sum of an element’s row index and column index is always equal to n - 1, where n is the number of rows (and columns) in the square matrix.

row_index + column_index == n - 1
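Applying the rule by brute force makes one consequence visible: every row has exactly one column that satisfies it. This sketch uses the same example matrix and collects both the matching (row, column) pairs and their values.

```python
matrix = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
n = len(matrix)  # assumes a square n x n matrix

pairs = []
anti_diagonal = []
for row_index in range(n):
    for column_index in range(n):
        # Keep a cell only when its indices sum to n - 1
        if row_index + column_index == n - 1:
            pairs.append((row_index, column_index))
            anti_diagonal.append(matrix[row_index][column_index])

print(pairs)          # [(0, 2), (1, 1), (2, 0)]
print(anti_diagonal)  # [30, 50, 70]
```

Since each row yields exactly one match, the inner loop can be dropped by solving the rule for the column: col = n - 1 - row.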

The Python Code

```python
matrix = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
n = len(matrix)  # assumes a square n x n matrix

# For the anti-diagonal, solve the rule for the column: col = n - 1 - row
anti_diagonal = [matrix[i][n - 1 - i] for i in range(n)]

print(anti_diagonal)  # [30, 50, 70]
```
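As with the main diagonal, a step-by-step trace shows the column being computed on each pass. This is a sketch on the same 3 x 3 example.

```python
matrix = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
n = len(matrix)

result = []
for i in range(n):
    # Solve the rule for the column: j = n - 1 - i
    j = n - 1 - i
    value = matrix[i][j]
    result.append(value)
    print(f"Step {i + 1}: (i = {i}) j = {n}-1-{i} = {j}, matrix[{i}][{j}] = {value}")

# Step 1: (i = 0) j = 3-1-0 = 2, matrix[0][2] = 30
# Step 2: (i = 1) j = 3-1-1 = 1, matrix[1][1] = 50
# Step 3: (i = 2) j = 3-1-2 = 0, matrix[2][0] = 70
```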

